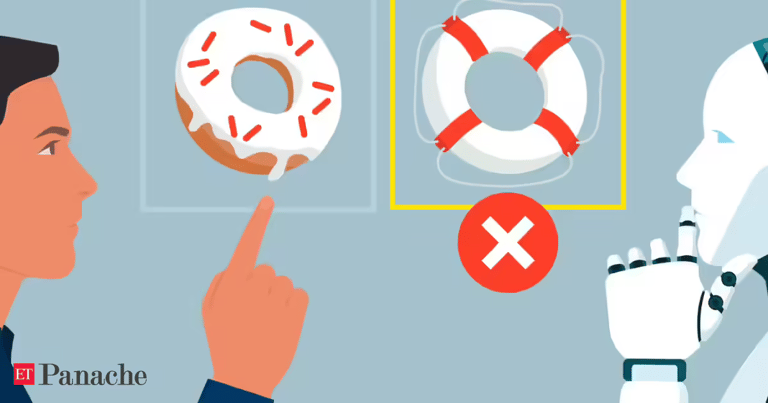

AI Models Mislead: Study Warns of Oversimplification in Scientific Summaries

July 5, 2025

A recent study published in Royal Society Open Science warns that popular large language models (LLMs), including ChatGPT, Llama, and DeepSeek, tend to oversimplify and distort complex scientific and medical information, which can lead to misinformation.

The study finds that newer versions of these models are increasingly prone to overgeneralization, producing summaries that sound authoritative but misrepresent the original research.

The research emphasizes the urgent need for improved safeguards within AI workflows to flag oversimplifications and prevent the spread of incorrect scientific and medical summaries, especially in critical areas like healthcare.

Specific examples include DeepSeek turning cautiously worded medical findings into definitive claims and Llama omitting critical qualifiers about medication dosages, raising concerns about dangerous misinterpretations.

Experts warn that reliance on these models without proper oversight risks widespread misinterpretation of scientific data, particularly as chatbot use in scientific communication continues to grow.

Testing 10 popular LLMs against human-written summaries revealed that the models are significantly more likely to overgeneralize, especially when converting quantitative findings into vague statements.

The study analyzed over 4,900 AI-generated summaries and found that LLMs are nearly five times more likely than humans to oversimplify scientific conclusions, with newer models being more prone to confidently providing false information.

The findings highlight that such overgeneralizations, particularly in healthcare, can lead to biases and unsafe treatment recommendations, raising concerns about trust and oversight.

Researchers warn that as AI models become more capable and instructable, they also become more prone to confidently delivering false information, which could undermine public trust and scientific literacy.

Overall, the study underscores the importance of cautious deployment and oversight of LLMs in scientific and medical contexts to maintain accuracy and trust.

The researchers say future work should extend to other scientific domains and to non-English texts, and they stress the need to build workflow guardrails that prevent oversimplification and the loss of critical information.

Summary based on 2 sources

Sources

Economic Times • Jul 5, 2025

AI makes science easy, but is it getting it right? Study warns LLMs are oversimplifying critical research